Facebook’s vs Twitter’s Approach to Real-Time Analytics

Last year, Twitter and Facebook released new versions of their real-time analytics systems.

In both cases, the motivation was similar: they wanted to give their customers better insight into the performance and effectiveness of their marketing activities. Facebook’s measurement includes “likes” and “comments” to monitor interactions. For Twitter, the measurement is based on the effectiveness of a given tweet, typically called “Reach”, which is a measure of the number of followers that were exposed to the tweet. Beyond the initial exposure, you often want to measure the number of clicks on that tweet, which indicates how many users saw the tweet and also looked into its content.
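As a rough illustration of the “Reach” measure described above, the sketch below counts the distinct followers exposed to a tweet. The follower graph, the user names, and the decision to count each follower only once are assumptions made for this example; they are not taken from Twitter’s actual implementation.

```python
def reach(tweeters, followers_of):
    """Count distinct accounts exposed to a tweet via the given tweeters.

    Assumption: a follower who sees the tweet from several accounts
    is counted once.
    """
    exposed = set()
    for user in tweeters:
        # Union in this user's followers; unknown users contribute nothing.
        exposed |= followers_of.get(user, set())
    return len(exposed)


# Hypothetical follower graph, for illustration only.
followers_of = {
    "alice": {"bob", "carol"},
    "dave": {"carol", "erin"},
}

print(reach(["alice", "dave"], followers_of))
```

Even in this toy form, the cost grows with the number of tweeters and the size of their follower sets, which is why the computation becomes expensive at scale.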


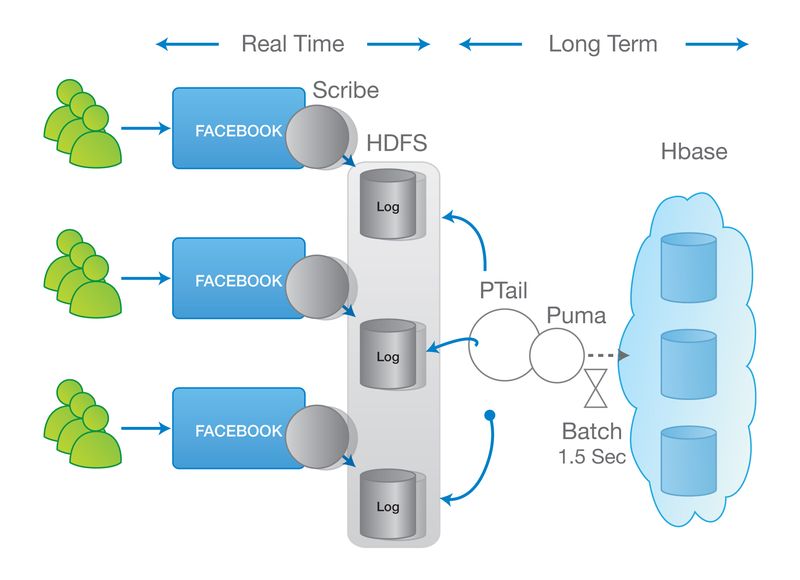

Facebook Real-Time Analytics Architecture – Logging-Centric Approach:

- Relies on the Apache Hadoop framework for both real-time and batch (MapReduce) processing. Using the same underlying system for both simplifies maintenance.

- Limited real-time processing — the logging-centric approach basically delegates most of the heavy lifting to the backend system. Performing even a fairly simple correlation beyond simple counting isn’t a trivial task.

- Real-time is often measured in tens of seconds. In many analytics systems, this order of magnitude is more than enough to provide a real-time view of what is going on in your system.

- It is suitable for simple processing. Because of the logging nature of the Facebook architecture, most of the heavy lifting of processing cannot be done in real-time and is often pushed into the backend system.

- Low parallelization: Hadoop systems do not give you ways to ensure ordering and consistency based on the data. Because of that, Facebook came up with its Puma service, which collects data and feeds it into a centralized service, making it easier to process events in order.

- Facebook collects user click streams from your Facebook wall through an Ajax listener, which then sends those events back to the Facebook data centers. The data is stored on the Hadoop Distributed File System (HDFS) via Scribe and collected by PTail.

- Puma aggregates logs in memory, batching them in windows of 1.5 seconds, and stores the results in HBase.

- The Facebook approach significantly limits the volume of events that the system can handle and has significant implications for the utilization of the overall system.
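The in-memory batching step described above can be sketched roughly as follows. This is a minimal illustration, not Puma’s code: the `WindowedCounter` class, the event keys, and the plain dictionary standing in for HBase are all hypothetical, and timestamps are passed in explicitly so the example is deterministic.

```python
from collections import Counter

WINDOW_SECONDS = 1.5  # batching window mentioned in the text


class WindowedCounter:
    """Aggregate events in memory and flush once per window (illustrative only)."""

    def __init__(self, store, window=WINDOW_SECONDS):
        self.store = store        # stand-in for a persistent store such as HBase
        self.window = window
        self.pending = Counter()  # counts accumulated in the current window
        self.window_start = None

    def add(self, key, timestamp):
        # The first event opens the first window.
        if self.window_start is None:
            self.window_start = timestamp
        # An event outside the current window triggers a flush before it is counted.
        if timestamp - self.window_start >= self.window:
            self.flush()
            self.window_start = timestamp
        self.pending[key] += 1

    def flush(self):
        # One write per key per window instead of one write per event.
        for key, count in self.pending.items():
            self.store[key] = self.store.get(key, 0) + count
        self.pending.clear()


store = {}
counter = WindowedCounter(store)
events = [
    ("like:post1", 0.0),
    ("like:post1", 0.4),
    ("comment:post1", 1.0),
    ("like:post1", 1.6),  # falls into the next window
]
for key, ts in events:
    counter.add(key, ts)
counter.flush()  # write out whatever is left in the last window
```

Batching this way trades a small delay (up to one window) for far fewer writes to the backing store, which fits the simple counting workload the logging-centric approach is suited for.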

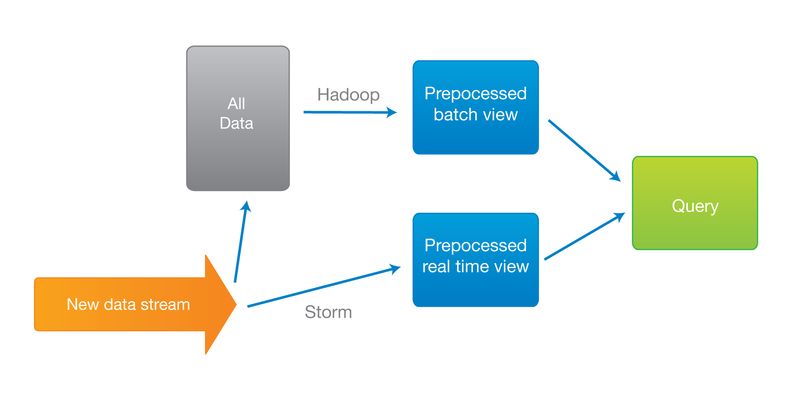

Twitter Real-Time Analytics Architecture – Event-Driven Approach:

- Unlike Facebook, Twitter uses Hadoop for batch processing and Storm for real-time processing. Storm was designed to perform fairly complex aggregation of the data that comes through the stream as it flows into the system, before it is sent back to the batch system for further analysis.

- Real-time can be measured in milliseconds. While second or millisecond latency is not crucial to the end user, it has a significant effect on the overall processing time and on the level of analysis that we can produce and push through the system, because many of those analyses involve thousands of operations to reach the final result.

- It is suitable for complex processing. With Storm, it is possible to perform a wide range of complex aggregations while the data flows through the system. This has a significant impact on the complexity of the processing. A good example is calculating trending words. With the event-driven approach, we can assume that we already hold the current state and only need to apply each change to that state to update the list of trending words. In contrast, a batch system has to read the entire set of words, recalculate, and re-order the words for every update. This is why those operations are often done in long batches.

- Extremely parallel – Asynchronous events are, by definition, easier to parallelize. Storm was designed for extreme parallelization. Ultimately, this determines the speed and the level of utilization that we can get per machine in our system. Looking at the bigger picture, it substantially affects the cost of our system and our ability to perform complex analyses.
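The trending-words example above can be sketched as a comparison between the two styles. This is a simplified illustration in plain Python rather than Storm code; the function and class names are hypothetical. The batch version rescans the full history on every update, while the event-driven version keeps running counts and applies each incoming word as a small change to that state.

```python
from collections import Counter


def batch_top_words(all_words, k):
    """Batch style: re-read and re-count the entire history on every update."""
    return Counter(all_words).most_common(k)


class StreamingTopWords:
    """Event-driven style: keep the current state and apply each event to it."""

    def __init__(self):
        self.counts = Counter()

    def on_word(self, word):
        # Constant-time state update per incoming event.
        self.counts[word] += 1

    def top(self, k):
        # Ranking reads only the running counts, never the raw history.
        return self.counts.most_common(k)


words = ["storm", "hadoop", "storm", "hbase", "storm", "hadoop"]

stream = StreamingTopWords()
for word in words:
    stream.on_word(word)

print(stream.top(2))
print(batch_top_words(words, 2))
```

Both calls produce the same ranking, but the streaming version never needs the raw word history again. A production system would likely also maintain the ranking incrementally (for example with a heap) instead of sorting the counts on each query; that refinement is omitted here for brevity.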

Final Words

Quite often, we get caught in the technical details of these discussions and lose sight of what this all really means.

If all you are looking for is to collect data streams and simply update counters, then both approaches would work. The main difference between the two lies in the level and complexity of the processing that you would like to perform in real time. If you want to continuously update different forms of sorted lists or indexes, you’ll find that doing so in an event-driven approach, as is the case with Twitter, can be orders of magnitude faster and more efficient than the logging-centric approach. To put some numbers behind that, Twitter reported that calculating the reach without Storm took 2 hours, whereas Storm could do the same in less than a second.

Such a difference in speed and utilization has a direct effect on the business bottom line, as it determines the level and depth of intelligence that a business can run against its data. It also determines the cost of running the analytics systems and, in some cases, the availability of those systems. When processing is slower, a larger number of scenarios can saturate the system.

(source: Nati Shalom’s blog)
